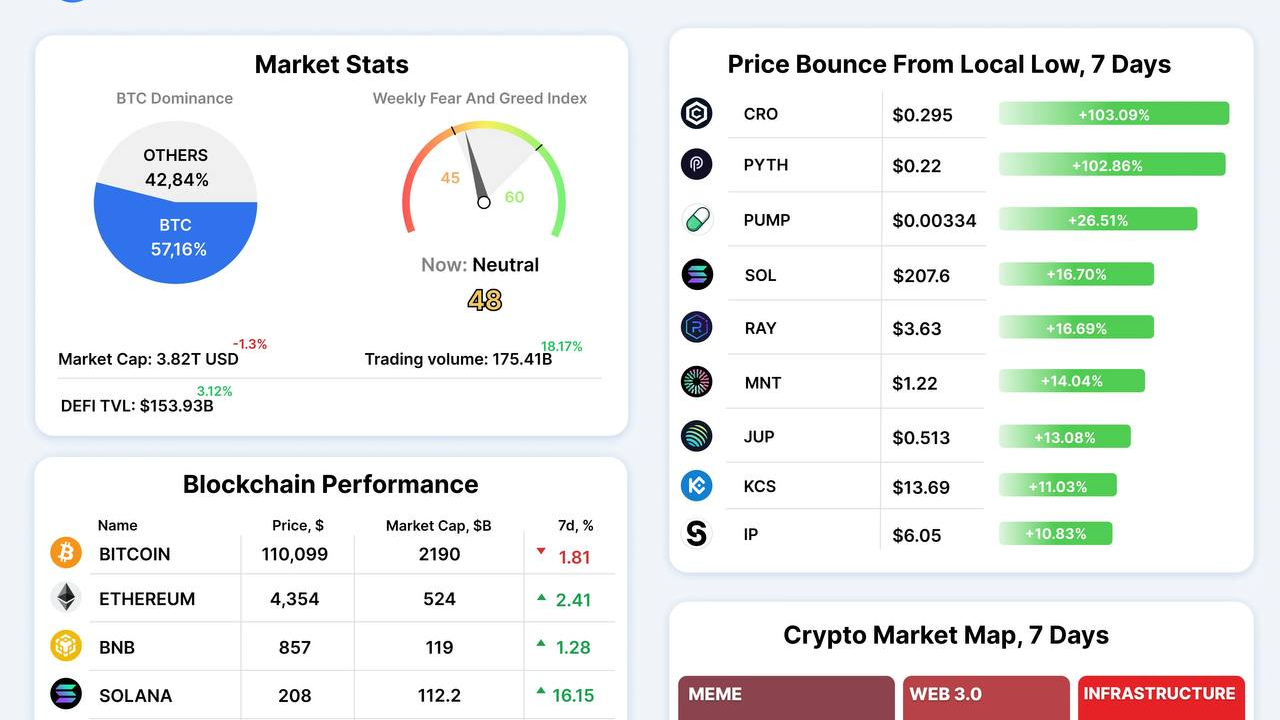

1️⃣Stripe and Paradigm launch new L1 chain

◻️Stripe and investment firm Paradigm have teamed up to introduce Tempo, a layer-1 blockchain designed specifically for payments.

2️⃣Mega Matrix files with SEC for stablecoin governance token treasury strategy

◻️Mega Matrix (MPU) has filed a $2 billion shelf registration with the SEC to pursue a treasury strategy focused on stablecoin governance tokens, especially Ethena's ENA token.

3️⃣Ukraine officially votes in favor of legalizing and taxing crypto

◻️The Ukrainian parliament voted in favor with 246 of 321 votes, moving toward legalizing and taxing the crypto market.

4️⃣World Liberty blacklists Justin Sun's wallet address

◻️World Liberty has frozen Justin Sun's address, locking billions of dollars in WLFI tokens after $9 million was transferred to exchanges, although Sun denies selling.

5️⃣At 19:30 tonight, the US releases non-farm payroll data for August:

◻️Unemployment rate expected at 4.3% (previously 4.2%).

◻️Non-farm payrolls are forecast to increase by 75,000 (previously 73,000).

◻️This data will strongly influence expectations for Fed policy and the risk asset market, especially crypto.

🔔 Connect with JuCoin now so you don't miss hot news on crypto, financial policy, and global geopolitics!

#JuCoin #CryptoNews #Stripe #Paradigm #MegaMatrix #ENAtoken #Stablecoin #DeFi #NFP #Ukraine

Lee Jucoin

2025-09-05 12:45

📰Crypto News 24h With #JuCoin! (05/09/2025)

Disclaimer:Contains third-party content. Not financial advice.

See Terms and Conditions.

What Is the FATF Travel Rule and How Does It Impact Cryptocurrency and Money Transfers?

The FATF Travel Rule is a significant regulation shaping how cross-border financial transactions, especially those involving cryptocurrencies, are conducted worldwide. As digital assets become more integrated into global finance, understanding this rule’s implications is crucial for both service providers and users.

Understanding the FATF Travel Rule

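The Travel Rule originated in 1996 as a US requirement for wire transfers under the Bank Secrecy Act. The Financial Action Task Force (FATF) adopted an equivalent international standard in 2001 (Special Recommendation VII, now Recommendation 16) as part of its efforts to combat money laundering and terrorist financing. Originally designed for traditional banking systems, it requires financial institutions to collect and verify information about both parties to a transfer above a set threshold (USD/EUR 1,000 under the FATF standard) and to pass that information along with the transfer, which is where the name comes from. This information includes names, addresses, account numbers, and other identifying details.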
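As a rough illustration of the threshold logic described above, the Python sketch below decides whether a transfer falls under the rule and which required details are missing before it can be sent. The 1,000 threshold, the field names, and the simplified required-field set are assumptions for this example, not an official message schema, and the sketch ignores the reduced requirements some jurisdictions apply below the threshold.

```python
from dataclasses import dataclass

# Assumed threshold (USD/EUR); some jurisdictions set a lower one.
TRAVEL_RULE_THRESHOLD = 1_000


@dataclass
class Party:
    # Hypothetical field names chosen for this example.
    name: str
    account_number: str
    address: str = ""


@dataclass
class Transfer:
    amount: float
    originator: Party
    beneficiary: Party


# Simplified, assumed set of fields that must travel with the transfer.
REQUIRED_FIELDS = {
    "originator": ("name", "account_number", "address"),
    "beneficiary": ("name", "account_number"),
}


def travel_rule_applies(transfer: Transfer) -> bool:
    """True when the amount meets or exceeds the assumed threshold."""
    return transfer.amount >= TRAVEL_RULE_THRESHOLD


def missing_fields(transfer: Transfer) -> list[str]:
    """Return required fields that are empty, e.g. 'beneficiary.name'."""
    missing = []
    for role, field_names in REQUIRED_FIELDS.items():
        party = getattr(transfer, role)
        for field_name in field_names:
            if not getattr(party, field_name).strip():
                missing.append(f"{role}.{field_name}")
    return missing


def can_send(transfer: Transfer) -> bool:
    """Below the threshold this sketch skips the check; at or above it, nothing may be missing."""
    return not travel_rule_applies(transfer) or not missing_fields(transfer)


if __name__ == "__main__":
    t = Transfer(2_500, Party("Alice Example", "ACC-1", "1 Main St"),
                 Party("Bob Example", "ACC-2"))
    print(travel_rule_applies(t), missing_fields(t), can_send(t))  # True [] True
```

Holding a transfer until `missing_fields` comes back empty is one simple way to enforce the rule; a real compliance system would also verify the data, not just check that it is present.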

In 2019, recognizing the rise of digital assets such as cryptocurrencies, FATF extended the rule to virtual asset service providers (VASPs), such as crypto exchanges and custodial wallet providers. The update aimed to bring transparency to crypto transactions by applying the same standards used in conventional finance, making it harder to launder money through untraceable transfers.

Why Was the Travel Rule Introduced?

The primary purpose of the Travel Rule is to enhance transparency across borders. When every institution handling a transfer, whether a bank or a crypto exchange, must exchange detailed information about the parties involved, criminals find it harder to move illicit funds undetected. For governments worldwide, the rule also supports international cooperation against financial crime.

For cryptocurrency markets specifically, implementing these rules helps legitimize digital assets within regulatory frameworks. It provides clarity on compliance expectations for exchanges and wallet providers operating across jurisdictions.

How Does the Travel Rule Affect Cryptocurrency Transactions?

Applying traditional AML/CFT standards like the Travel Rule presents unique challenges within decentralized digital ecosystems:

- Regulatory Clarity: The rule offers clearer guidelines for crypto exchanges on what data they need to collect from users when facilitating cross-border transfers.

- Compliance Challenges: Unlike banks, which hold centralized customer records, many cryptocurrency platforms deal with pseudonymous addresses that are not inherently linked to real-world identities.

- Technological Solutions: To bridge this gap, industry players are integrating Know Your Customer (KYC) checks into their platforms or adopting third-party compliance solutions that let providers share the required data securely while respecting privacy concerns.

Despite these efforts, some smaller or decentralized services struggle to comply fully because of limited resources or technical constraints, and those that cannot meet the requirements may have to leave certain markets.

Impact on Money Transfer Services

Traditional money transfer services have long relied on KYC procedures; applying them under FATF guidance aims to make these processes uniform worldwide:

- Enhanced Security: Collecting verified sender and receiver data reduces the risk of fraud and other illegal activity.

- Operational Costs: Comprehensive compliance measures raise operating expenses for financial institutions, and these costs may be passed on to consumers through higher fees.

- Global Cooperation: Standardized AML/CFT practices foster better international collaboration among regulators by establishing common standards for cross-border transfers.

This harmonization aims to make illicit fund movement more difficult while streamlining legitimate international commerce.

Recent Developments in Regulatory Guidance

In June 2023, FATF issued updated guidance focused specifically on implementing the Travel Rule for digital assets. It emphasizes robust customer due diligence alongside advanced technologies such as blockchain analytics tools, which can trace transaction flows without excessively compromising user privacy.
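At their core, blockchain analytics tools follow funds through a graph of transfers between addresses. The sketch below shows only that traversal step, as a breadth-first search from one flagged address up to a hop limit; the addresses and transfers are hypothetical, and commercial tools layer many more heuristics (such as grouping addresses that likely belong to the same owner) on top of it.

```python
from collections import deque


def trace_downstream(transfers, start, max_hops):
    """Breadth-first search over (sender, receiver) pairs.

    Returns a dict mapping each address reachable from `start` within
    `max_hops` transfers to the number of hops needed to reach it.
    """
    graph = {}
    for sender, receiver in transfers:
        graph.setdefault(sender, []).append(receiver)

    hops = {start: 0}
    queue = deque([start])
    while queue:
        address = queue.popleft()
        if hops[address] == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in graph.get(address, []):
            if nxt not in hops:  # in BFS the first visit is the shortest path
                hops[nxt] = hops[address] + 1
                queue.append(nxt)
    return hops


# Hypothetical addresses and transfers.
transfers = [("flagged", "a"), ("a", "b"), ("b", "exchange"), ("c", "d")]
print(trace_downstream(transfers, "flagged", max_hops=2))
# {'flagged': 0, 'a': 1, 'b': 2}
```

Raising `max_hops` to 3 would surface the `exchange` address, which is the kind of link a compliance team might then investigate.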

Many cryptocurrency exchanges have responded proactively by upgrading their KYC/AML systems or partnering with specialized compliance firms. These adaptations aim to balance regulatory adherence with user experience, a key factor in the broader adoption of compliant crypto services.

Challenges Facing Industry Stakeholders

While these regulations improve overall security and legitimacy in crypto markets, they also raise concerns:

- Critics argue there’s potential overreach—regulations might stifle innovation if overly burdensome.

- Privacy advocates express concern over increased personal data collection which could compromise user anonymity—a core appeal of cryptocurrencies.

- Smaller operators face operational hurdles; some may exit certain regions if compliance costs outweigh benefits or if they lack necessary infrastructure support.

How to regulate effectively without hindering technological progress remains an ongoing debate among policymakers and industry leaders.

How Will Future Regulations Shape Cross-Border Crypto Transactions?

As regulators refine their cryptocurrency policies under frameworks such as FATF's June 2023 guidance, cross-border crypto transactions will likely become more standardized but also face heightened scrutiny, sharpening the tension between privacy rights and security needs.

Emerging innovations such as decentralized identity verification could help reconcile these competing interests by enabling verification that is secure yet private while still meeting Travel Rule requirements.

Final Thoughts

The FATF Travel Rule marks a pivotal step toward bringing cryptocurrencies under mainstream financial oversight. While it introduces notable compliance challenges, particularly around privacy, it also offers opportunities for greater legitimacy and for cross-border cooperation against financial crime. As technology and regulation evolve, including FATF's mid-2023 guidance, the industry must adapt quickly while safeguarding user rights amid growing demands for transparency.

By understanding what the FATF Travel Rule entails—and how it influences both traditional money transfer services and emerging crypto markets—you can better navigate this complex regulatory environment.

kai

2025-05-22 12:14

What is the FATF Travel Rule, and how does it impact transfers?

What Is Liquidity Mining in the DeFi Ecosystem?

Liquidity mining is a fundamental concept within the decentralized finance (DeFi) landscape that has significantly contributed to its rapid growth. It involves incentivizing users to supply liquidity—essentially, funds—to decentralized exchanges (DEXs) and other financial protocols. This process not only enhances the trading experience by reducing slippage but also fosters community participation and decentralization.

Understanding Liquidity Mining: How Does It Work?

At its core, liquidity mining encourages users to deposit their cryptocurrencies into liquidity pools on DeFi platforms. These pools are used to facilitate trading, lending, or other financial activities without relying on centralized intermediaries. In return for providing this liquidity, participants earn rewards—often in the form of governance tokens or interest payments.

For example, when a user deposits ETH and USDT into a DEX like Uniswap or SushiSwap, they become a liquidity provider (LP). As trades occur within these pools, LPs earn transaction fees proportional to their share of the pool. Additionally, many protocols distribute native governance tokens as incentives—these tokens can grant voting rights and influence protocol development.
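The proportional fee split described above reduces to simple arithmetic. The sketch below assumes a 0.30% fee tier (the rate Uniswap v2 charges) and hypothetical volume and LP-token figures. It is a simplification: in Uniswap v2, for instance, fees accrue inside the pool and are realized when LP tokens are redeemed rather than paid out separately.

```python
def pool_fees(volume: float, fee_rate: float = 0.003) -> float:
    """Trading fees generated by a pool; 0.003 assumes a 0.30% fee tier."""
    return volume * fee_rate


def lp_fee_earnings(volume: float, lp_shares: float, total_shares: float,
                    fee_rate: float = 0.003) -> float:
    """An LP's cut of the fees, proportional to its share of all LP tokens."""
    if total_shares <= 0:
        raise ValueError("total_shares must be positive")
    if not 0 <= lp_shares <= total_shares:
        raise ValueError("lp_shares must be between 0 and total_shares")
    return pool_fees(volume, fee_rate) * lp_shares / total_shares


# Hypothetical pool: 1,000,000 of daily volume; the LP holds 20 of 1,000 LP tokens (2%).
print(lp_fee_earnings(1_000_000, lp_shares=20, total_shares=1_000))  # 60.0
```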

This mechanism aligns with DeFi's ethos of decentralization by allowing individual users rather than centralized entities to control significant parts of financial operations. It also helps improve market efficiency by increasing available liquidity for various assets.

The Evolution of Liquidity Mining in DeFi

Liquidity mining emerged as an innovative solution to traditional finance’s limitations regarding capital requirements and central control over markets. Unlike conventional market-making—which often requires substantial capital reserves—liquidity mining democratizes access by enabling anyone with crypto assets to participate actively.

In recent years, yield farming, a closely related practice, has gained popularity among crypto enthusiasts seeking higher returns. Yield farmers deposit assets into pools on protocols such as Compound, while aggregators such as Yearn.finance automatically move deposited funds between strategies involving staking and lending to optimize yields.
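One ingredient of the yield optimization described above is reinvesting (compounding) rewards on the user's behalf. A minimal sketch of why compounding frequency matters, assuming a constant APR and ignoring transaction costs and changes in reward-token prices; the function name and the 20% APR are hypothetical.

```python
def apy_from_apr(apr: float, compounds_per_year: int) -> float:
    """Effective annual yield if rewards are reinvested n times a year at a constant APR."""
    if compounds_per_year < 1:
        raise ValueError("compounds_per_year must be at least 1")
    return (1 + apr / compounds_per_year) ** compounds_per_year - 1


# Hypothetical 20% APR: yearly, weekly, and daily reinvestment.
for n in (1, 52, 365):
    print(n, round(apy_from_apr(0.20, n), 4))
```

Daily compounding lifts a 20% APR to roughly 22% APY, but each reinvestment is a transaction, so in practice the gain has to outweigh the fees paid to compound.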

The rise of yield farming has drawn large sums into DeFi projects quickly, but it has also exposed participants to new risks such as smart contract vulnerabilities and impermanent loss: the shortfall an LP suffers, compared with simply holding the tokens, when the prices of the pooled tokens diverge.
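For a 50/50 constant-product pool (the x * y = k design used by Uniswap v2), impermanent loss depends only on how far the price ratio has moved since deposit. The sketch below applies the standard closed-form expression and ignores trading fees, which partly offset the loss; concentrated-liquidity pools such as Uniswap v3 behave differently, so the formula does not carry over to them.

```python
import math


def impermanent_loss(price_ratio: float) -> float:
    """Impermanent loss for a 50/50 constant-product (x * y = k) pool, ignoring fees.

    price_ratio is the current price of one pooled token in terms of the
    other, divided by that price at deposit time. The result is
    (value of LP position / value of simply holding) - 1, so -0.2 means the
    LP position is worth 20% less than holding the tokens would have been.
    """
    if price_ratio <= 0:
        raise ValueError("price_ratio must be positive")
    return 2 * math.sqrt(price_ratio) / (1 + price_ratio) - 1


for r in (1.0, 1.5, 2.0, 4.0):
    print(f"price x{r}: {impermanent_loss(r):.2%}")
```

A 2x price move costs about 5.7% relative to holding, and a 4x move about 20%. The loss is symmetric (a move to 0.25x costs the same as 4x), and it is only "impermanent" in that it shrinks again if prices return to the deposit ratio.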

Key Benefits for Participants

Participating in liquidity mining offers several advantages:

- Earning Rewards: Users receive governance tokens that can be held or traded; these may appreciate if the platform succeeds.

- Interest Income: Some protocols provide interest payments similar to traditional savings accounts.

- Influence Over Protocol Development: Governance tokens give holders voting rights on key decisions such as platform upgrades or fee structures.

- Supporting Decentralization: By contributing funds directly into protocols, LPs help maintain open-access markets free from central authority control.

However, participants should always weigh risks such as token volatility and smart contract security before committing significant funds to liquidity mining.

Challenges Facing Liquidity Mining

Although liquidity mining can be lucrative, several challenges threaten its sustainability:

Market Volatility

Governance tokens earned via liquidity provision tend to be highly volatile due to fluctuating cryptocurrency prices and market sentiment shifts. This volatility can diminish long-term profitability if token values decline sharply after initial rewards are earned.

Regulatory Risks

As authorities worldwide scrutinize DeFi activities more closely—including yield farming—they may impose regulations that restrict certain operations or classify some tokens as securities. Such regulatory uncertainty could impact user participation levels significantly.

Security Concerns

Exploits have been notable setbacks for many platforms. The 2022 hack of the Ronin Network bridge, in which attackers gained control of validator private keys, shows that weak operational security can be as damaging as flawed code, while other incidents have exploited bugs in complex, insufficiently audited smart contracts. These incidents erode trust among participants and can cause substantial financial losses.

Scalability Issues

When activity spikes on popular protocols such as Aave or Curve Finance, network congestion drives up transaction fees (gas costs) and slows processing, degrading the user experience during peak periods.
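High gas prices matter to liquidity miners because every deposit, claim, or compound is a transaction. A minimal break-even sketch: `gas_price_gwei` stands for the effective price paid per unit of gas (on Ethereum since EIP-1559, the base fee plus the priority fee), and the 150,000 gas figure, the ETH price, and the reward size are hypothetical.

```python
GWEI_PER_ETH = 1_000_000_000


def tx_cost_usd(gas_used: int, gas_price_gwei: float, eth_price_usd: float) -> float:
    """Fee in USD: gas units times price per unit (gwei), converted to ETH, then to USD."""
    return gas_used * gas_price_gwei / GWEI_PER_ETH * eth_price_usd


def worth_claiming(reward_usd: float, gas_used: int, gas_price_gwei: float,
                   eth_price_usd: float) -> bool:
    """True if the reward exceeds the cost of the claim transaction."""
    return reward_usd > tx_cost_usd(gas_used, gas_price_gwei, eth_price_usd)


# Hypothetical claim using 150,000 gas with ETH at $3,000: quiet vs. congested network.
for gwei in (20, 200):
    cost = tx_cost_usd(150_000, gwei, 3_000)
    print(f"{gwei} gwei -> ${cost:.2f}, claim a $50 reward: "
          f"{worth_claiming(50, 150_000, gwei, 3_000)}")
```

At 20 gwei the claim costs about $9, but at 200 gwei it costs about $90, so the same $50 reward stops being worth collecting during congestion.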

The Future Outlook: Opportunities & Risks

Ethereum completed its move from proof-of-work to proof-of-stake with the Merge in September 2022. That switch sharply reduced the network's energy use but did not by itself make transactions meaningfully faster or cheaper; scalability gains are coming mainly from layer-2 rollups and follow-up protocol upgrades, which could lower costs for liquidity providers seeking efficiency amid growing demand.

Furthermore:

- Competition among protocols continues to drive innovation.

- New incentive models emerge regularly.

- Cross-chain integrations expand access across multiple blockchains.

Together, these trends promise further growth for liquidity miners.

However, regulatory developments remain unpredictable, security remains paramount (with ongoing efforts toward better auditing practices), and scalability challenges must be addressed comprehensively before mass adoption becomes truly sustainable.

Final Thoughts on Liquidity Mining's Role in DeFi

Liquidity mining remains one of the most impactful innovations in decentralized finance: it broadens participation and fuels platform growth by rewarding users who provide assets. Its success hinges on balancing attractive rewards against inherent risks such as price volatility, security vulnerabilities, and regulatory uncertainty, and on building infrastructure robust enough to handle increased activity efficiently.

As DeFi continues evolving rapidly—with technological advancements like layer-two solutions promising enhanced scalability—the landscape around liquidity provisioning will likely become more sophisticated yet safer for everyday investors seeking exposure beyond traditional banking systems.

By understanding how it works—and recognizing both its opportunities and pitfalls—participants can better navigate this dynamic environment while contributing meaningfully toward building resilient decentralized financial ecosystems rooted firmly in transparency and community-driven governance.

Keywords: Liquidity Mining, Decentralized Finance, Yield Farming, Crypto Rewards, Smart Contract Security, Blockchain Protocols, Governance Tokens, Market Volatility

kai

2025-05-22 08:10

What is "liquidity mining" within the DeFi ecosystem?

What Is Key Management Best Practice?

Understanding the Fundamentals of Key Management

Key management is a cornerstone of cybersecurity, especially in cryptographic systems that safeguard sensitive data. It encompasses the entire lifecycle of cryptographic keys—from their creation to their eventual disposal. Proper key management ensures that data remains confidential, authentic, and unaltered during storage and transmission. Without robust practices, even the strongest encryption algorithms can be rendered ineffective if keys are mishandled or compromised.

Secure Key Generation: The First Line of Defense

The foundation of effective key management is secure key generation. Keys must come from a cryptographically secure random number generator (RNG) so that they are unpredictable and uniformly distributed; combined with sufficient key length, this prevents attackers from predicting keys or recovering them by brute force. NIST SP 800-90A specifies deterministic random bit generators (DRBGs) for this purpose, and NIST SP 800-90B covers the entropy sources that seed them.
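In Python, for example, key material should come from the standard-library `secrets` module, which draws on the operating system's cryptographically secure generator, rather than from the `random` module, which the Python documentation warns is not suitable for security purposes. A minimal sketch; the function name and the 128-bit floor are choices made for this example, and whether the underlying OS generator satisfies a particular certification such as NIST SP 800-90A depends on the platform.

```python
import secrets


def generate_key(num_bytes: int = 32) -> bytes:
    """Return a random key from the OS CSPRNG (32 bytes = 256 bits)."""
    if num_bytes < 16:
        raise ValueError("refusing to generate a key shorter than 128 bits")
    return secrets.token_bytes(num_bytes)


key = generate_key()
print(len(key) * 8, "bit key:", key.hex())
```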

Key Distribution: Safeguarding Data During Exchange

Once generated, keys must be shared between parties without interception by malicious actors. Key exchange protocols such as Diffie-Hellman or Elliptic Curve Diffie-Hellman (ECDH) let two parties establish a shared secret over an insecure channel without ever transmitting the secret itself, which defeats passive eavesdropping. On their own, however, these exchanges do not stop man-in-the-middle attacks: the parties must also authenticate each other, for example with digital signatures or certificates.
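The core idea of Diffie-Hellman fits in a few lines of standard-library Python. This is a deliberately toy finite-field version (not ECDH) with parameters chosen for readability, so it is insecure: the modulus is far too small and is not a vetted standard group, there is no authentication, and production systems should instead use a vetted library and a standard curve such as X25519. The names `P`, `G`, `make_keypair`, and `derive_shared_key` are illustrative.

```python
import hashlib
import secrets

# Toy parameters for illustration only. 2**127 - 1 is prime, but a group this
# small (and not a vetted standard group) offers no real security.
P = 2**127 - 1
G = 3  # base element; its subgroup order is not checked here


def make_keypair() -> tuple[int, int]:
    private = secrets.randbelow(P - 3) + 2  # secret exponent in [2, P - 2]
    public = pow(G, private, P)             # this value may be sent in the clear
    return private, public


def derive_shared_key(my_private: int, their_public: int) -> bytes:
    if not 1 < their_public < P - 1:
        raise ValueError("peer public value out of range")
    shared_secret = pow(their_public, my_private, P)  # G**(a*b) mod P on both sides
    # Hash the raw secret into a 256-bit key (real protocols use a KDF such as HKDF).
    return hashlib.sha256(shared_secret.to_bytes(16, "big")).digest()


alice_private, alice_public = make_keypair()
bob_private, bob_public = make_keypair()
# Only the public values cross the network, yet both sides derive the same key.
assert derive_shared_key(alice_private, bob_public) == derive_shared_key(bob_private, alice_public)
```

Because nothing here proves who sent `bob_public`, an attacker who can alter traffic could run separate exchanges with each side; that gap is why the exchange has to be authenticated.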

Secure Storage Solutions: Protecting Keys at Rest

Storing cryptographic keys securely is as vital as generating and exchanging them safely. Hardware Security Modules (HSMs) and Trusted Platform Modules (TPMs) provide tamper-resistant and tamper-evident environments built specifically to protect sensitive keys, making unauthorized access far more difficult than with software-based storage.
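Applications typically talk to an HSM through a standard interface such as PKCS#11, referring to keys by handle or label and asking the module to perform operations, so raw key bytes never leave it. The hypothetical `SoftKeyStore` below does not use any real HSM API; it is a software-only stand-in that mimics just that access pattern (generate, sign, verify, destroy by label) using HMAC-SHA256. It provides none of the hardware protection: other Python code can still read `_keys`, which is precisely the gap tamper-resistant hardware closes.

```python
import hashlib
import hmac
import secrets


class SoftKeyStore:
    """Software stand-in for the HSM access pattern: callers use labels, never key bytes."""

    def __init__(self) -> None:
        self._keys: dict[str, bytes] = {}

    def generate(self, label: str) -> str:
        if label in self._keys:
            raise KeyError(f"key {label!r} already exists")
        self._keys[label] = secrets.token_bytes(32)
        return label

    def sign(self, label: str, message: bytes) -> bytes:
        # The key is used inside the store; only the MAC tag is returned.
        return hmac.new(self._keys[label], message, hashlib.sha256).digest()

    def verify(self, label: str, message: bytes, tag: bytes) -> bool:
        # Constant-time comparison avoids leaking how many bytes matched.
        return hmac.compare_digest(self.sign(label, message), tag)

    def destroy(self, label: str) -> None:
        del self._keys[label]


store = SoftKeyStore()
store.generate("payments")
tag = store.sign("payments", b"transfer 100")
print(store.verify("payments", b"transfer 100", tag))  # True
```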

Effective Key Revocation Strategies

Keys should not remain valid indefinitely: they should expire or be rotated on a schedule, and they must be revoked promptly if compromised. Mechanisms such as Certificate Revocation Lists (CRLs) and the Online Certificate Status Protocol (OCSP) let organizations revoke compromised or outdated certificates quickly. Keeping revocation information current ensures that systems do not rely on invalid credentials, maintaining overall security integrity.
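A relying system therefore checks two things before trusting a certificate: that it is inside its validity period, and that it has not been revoked. The sketch below shows only those two checks with hypothetical data, modelling a CRL as a set of revoked serial numbers. Real validation also verifies the certificate chain and the CRL's own signature and freshness, or queries an OCSP responder instead.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Certificate:
    serial: int
    not_before: datetime
    not_after: datetime


def is_usable(cert: Certificate, revoked_serials: set[int], now: datetime) -> bool:
    """Reject certificates outside their validity window or listed as revoked."""
    if not cert.not_before <= now <= cert.not_after:
        return False  # expired or not yet valid
    return cert.serial not in revoked_serials  # serials from a recently fetched CRL


# Hypothetical certificate and revocation data.
cert = Certificate(
    serial=1001,
    not_before=datetime(2025, 1, 1, tzinfo=timezone.utc),
    not_after=datetime(2026, 1, 1, tzinfo=timezone.utc),
)
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
print(is_usable(cert, revoked_serials={42}, now=now))    # True
print(is_usable(cert, revoked_serials={1001}, now=now))  # False
```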

Compliance with Industry Regulations

Adhering to industry-specific regulations is crucial for organizations that handle sensitive information in sectors such as finance, healthcare, and government. PCI-DSS, which governs payment card security, sets explicit key-management requirements covering the key lifecycle from generation to destruction, while HIPAA (healthcare data privacy) and GDPR (data protection in Europe) require appropriate safeguards such as encryption without prescribing detailed key-handling procedures.

Recent Advances Shaping Key Management Practices

Emerging technologies are transforming traditional approaches toward more resilient security frameworks:

Quantum Computing Threats: A sufficiently large quantum computer running Shor's algorithm could break widely used public-key schemes such as RSA and elliptic curve cryptography (ECC). To counter this threat, researchers advocate adopting post-quantum algorithms, such as lattice-based and hash-based schemes, that are believed to resist quantum attacks. NIST published its first post-quantum standards in 2024, covering lattice-based (ML-KEM, ML-DSA) and hash-based (SLH-DSA) algorithms.
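Hash-based signatures are the easiest post-quantum idea to see in code, because their security rests only on the hash function. The sketch below is a textbook Lamport one-time signature over SHA-256. It is an illustration, not a standardized scheme: each key pair may sign exactly one message, and modern hash-based standards such as SLH-DSA build on the same principle with many refinements.

```python
import hashlib
import secrets

N_BITS = 256  # one pair of secrets per bit of a SHA-256 digest
KeyPairs = list[tuple[bytes, bytes]]


def keygen() -> tuple[KeyPairs, KeyPairs]:
    # Private key: 256 pairs of random 32-byte values.
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(N_BITS)]
    # Public key: the SHA-256 hash of every private value.
    pk = [(hashlib.sha256(a).digest(), hashlib.sha256(b).digest()) for a, b in sk]
    return sk, pk


def _digest_bits(message: bytes) -> list[int]:
    # Bits of SHA-256(message), most significant first.
    d = int.from_bytes(hashlib.sha256(message).digest(), "big")
    return [(d >> (N_BITS - 1 - i)) & 1 for i in range(N_BITS)]


def sign(sk: KeyPairs, message: bytes) -> list[bytes]:
    # Reveal one private value per digest bit; this key must never sign again.
    return [sk[i][bit] for i, bit in enumerate(_digest_bits(message))]


def verify(pk: KeyPairs, message: bytes, signature: list[bytes]) -> bool:
    if len(signature) != N_BITS:
        return False
    bits = _digest_bits(message)
    # Each revealed value must hash to the matching half of the public key.
    return all(
        hashlib.sha256(value).digest() == pk[i][bit]
        for i, (value, bit) in enumerate(zip(signature, bits))
    )


sk, pk = keygen()
sig = sign(sk, b"rotate key k1")
print(verify(pk, b"rotate key k1", sig))  # True
print(verify(pk, b"rotate key k2", sig))  # False
```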

AI-Powered Automation: Machine learning can help organizations manage large volumes of cryptographic operations, for example by flagging anomalous key usage, while automating routine tasks such as key generation, rotation, and distribution monitoring reduces the risk of human error.

Blockchain-Based Solutions: Decentralized blockchain platforms offer a possible basis for transparent, tamper-evident key management systems: records are hard to alter without detection, and the shared ledger provides an audit trail across distributed networks.
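The tamper-evidence that blockchains offer comes from hash chaining: each entry commits to the hash of the previous one, so altering any past entry breaks every link after it. The sketch below applies only that idea to a key-lifecycle audit log. It is a single-writer illustration with hypothetical event names and has none of a blockchain's distributed consensus.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    # Canonical JSON (sorted keys) so the same event always hashes identically.
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append(log: list[dict], event: dict) -> None:
    # Each new entry commits to the hash of the entry before it.
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev, "hash": _entry_hash(prev, event)})


def verify_chain(log: list[dict]) -> bool:
    # Recompute every link; any edited entry changes its hash and breaks the chain.
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _entry_hash(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True


audit_log: list[dict] = []
append(audit_log, {"action": "generate", "key_id": "k1"})
append(audit_log, {"action": "rotate", "key_id": "k1"})
append(audit_log, {"action": "revoke", "key_id": "k1"})
print(verify_chain(audit_log))  # True

audit_log[1]["event"]["action"] = "export"  # tamper with history
print(verify_chain(audit_log))  # False
```

Someone who can rewrite the entire log could recompute every hash, which is why real systems replicate the log or publish the latest hash somewhere the writer does not control.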

Risks Associated with Poor Key Management

Neglecting best practices can lead directly to severe consequences:

Security Breaches: Inadequate protection may let attackers access encrypted data by stealing keys or exploiting weakly stored ones.

Regulatory Penalties: Non-compliance with standards like GDPR can result in hefty fines alongside reputational damage.

Technological Vulnerabilities: Failing to adapt strategies to rapid technological change increases exposure, especially as advances in quantum computing threaten existing public-key encryption methods.

Implementing Best Practices Across Industries

Organizations should adopt comprehensive policies aligned with recognized standards:

- Use strong RNGs compliant with NIST guidelines during key creation.

- Employ secure protocols like ECDH during distribution phases.

- Store all critical keys within HSMs/TPMs rather than unsecured servers.

- Establish clear procedures for timely revocation using CRLs/OCSP.

- Ensure compliance by regularly auditing processes against relevant regulations such as PCI-DSS or GDPR.
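For the first item on that list, most languages expose the operating system's cryptographically secure random number generator directly. In Python that is the `secrets` module; the general-purpose `random` module is a predictable Mersenne Twister and must not be used for keys. The helper name below is hypothetical, and environments with formal compliance requirements may additionally need a validated cryptographic module.

```python
import secrets


def new_symmetric_key(bits: int = 256) -> bytes:
    """Return a fresh random key drawn from the OS CSPRNG via `secrets`."""
    if bits % 8 != 0 or bits < 128:
        raise ValueError("use a whole number of bytes and at least 128 bits")
    return secrets.token_bytes(bits // 8)


key = new_symmetric_key()
print(len(key))  # 32
```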

Staying Ahead With Emerging Technologies

To future-proof their security infrastructure:

Invest in research into quantum-resistant algorithms before widespread adoption becomes necessary.

Leverage AI tools cautiously—balancing automation benefits against potential new attack vectors introduced via machine learning models.

Explore blockchain-based solutions carefully, weighing their scalability limitations against their potential benefits in transparency and decentralization.

Final Thoughts on Effective Key Management

Robust key management is the backbone of any organization's encryption-based cybersecurity strategy, and emerging threats make it even more important to keep up with evolving best practices. By prioritizing secure key generation, protected storage, diligent revocation procedures, and regulatory compliance, while adopting new technologies responsibly, businesses can significantly reduce the risk of data breaches and strengthen trust among clients and partners.

Keywords: Cryptographic Keys | Data Security | Secure Storage | Encryption Best Practices | Quantum Resistance | AI Security Tools | Blockchain Security

JCUSER-F1IIaxXA

2025-05-15 01:34

What is key management best practice?



Proposals to Upgrade the Dogecoin (DOGE) Consensus Mechanism

Dogecoin (DOGE) has established itself as a popular cryptocurrency largely due to its vibrant community and meme-inspired branding. As with all blockchain networks, maintaining a secure, scalable, and energy-efficient consensus mechanism is vital for its long-term viability. Over recent months, discussions within the Dogecoin community have centered around potential upgrades to its current Proof of Work (PoW) system. This article explores the main proposals under consideration, their implications, and what they could mean for DOGE’s future.

Understanding Dogecoin’s Current Proof of Work System

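Dogecoin operates on a PoW consensus mechanism similar to Bitcoin's, although it uses the Scrypt hashing algorithm rather than Bitcoin's SHA-256 and has been merge-mined with Litecoin since 2014. Miners repeatedly hash candidate blocks until one meets the network's difficulty target, which validates the block's transactions and lets it be added to the blockchain. This method has proven effective at securing the network and preserving decentralization over time, but it has notable drawbacks, most prominently high energy consumption and scalability challenges.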
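The "puzzle" in PoW is a brute-force search for a nonce that makes the block header's hash fall below a target. The toy sketch below shows that loop with a tiny difficulty. It uses double SHA-256 for brevity, whereas Dogecoin's actual proof-of-work hash is Scrypt, and the header bytes are a hypothetical placeholder rather than a real serialized header.

```python
import hashlib

HEADER = b"toy block header"  # hypothetical stand-in for a serialized block header


def pow_hash(header: bytes, nonce: int) -> int:
    # Double SHA-256 of header || nonce, read as a 256-bit integer.
    # (Dogecoin's real PoW hash is Scrypt; SHA-256 keeps this sketch short.)
    data = header + nonce.to_bytes(8, "little")
    return int.from_bytes(hashlib.sha256(hashlib.sha256(data).digest()).digest(), "big")


def is_valid(header: bytes, nonce: int, difficulty_bits: int) -> bool:
    # Checking a solution costs one hash, however long the search took.
    return pow_hash(header, nonce) < (1 << (256 - difficulty_bits))


def mine(header: bytes, difficulty_bits: int) -> int:
    # Brute-force search: try nonces in order until one meets the target.
    nonce = 0
    while not is_valid(header, nonce, difficulty_bits):
        nonce += 1
    return nonce


nonce = mine(HEADER, difficulty_bits=12)
print(is_valid(HEADER, nonce, 12))  # True
```

Each extra difficulty bit doubles the expected number of hashes a miner must try, which is where PoW's energy cost comes from, while verification stays a single hash.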

PoW requires significant computational power, which translates into substantial electricity use—a concern increasingly scrutinized amid global efforts toward sustainability. Additionally, as transaction volumes grow, network speed can become a bottleneck without further protocol adjustments.

Main Proposals for Upgrading Dogecoin’s Consensus Mechanism

Given these limitations, several proposals have emerged within the community aiming to modernize or diversify how DOGE achieves consensus:

Transitioning from Proof of Work to Proof of Stake (PoS)

One prominent idea is shifting from PoW towards a PoS model. In PoS systems, validators are chosen based on the amount of coins they stake rather than solving puzzles through computational work. This change could significantly reduce energy consumption while potentially increasing transaction throughput.
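At its core, choosing validators "based on the amount of coins they stake" is a weighted random draw. The sketch below picks a validator with probability proportional to stake from a shared seed. The names and stakes are hypothetical, and real PoS chains derive their randomness from manipulation-resistant mechanisms (such as verifiable random functions), which this toy omits.

```python
import hashlib


def select_validator(stakes: dict[str, int], seed: bytes) -> str:
    """Pick one validator with probability proportional to its stake (toy)."""
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("no stake to select from")
    # Every node hashes the same public seed, so all agree on the same ticket.
    ticket = int.from_bytes(hashlib.sha256(seed).digest(), "big") % total
    # Lay validators end to end in a fixed order; each owns a slice as wide as its stake.
    for name in sorted(stakes):
        if ticket < stakes[name]:
            return name
        ticket -= stakes[name]
    raise AssertionError("unreachable: ticket is always below the total stake")


stakes = {"alice": 600, "bob": 300, "carol": 100}  # hypothetical stakes
picks = [select_validator(stakes, f"block-{height}".encode()) for height in range(10_000)]
counts = {name: picks.count(name) for name in stakes}
print(counts)
```

Over many blocks the counts come out roughly in proportion to stake (about 60, 30, and 10 percent here), with no mining work involved.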

However, transitioning from PoW to PoS involves complex technical modifications that would require extensive development work and careful planning—especially considering Dogecoin's existing infrastructure built around mining-based validation. Critics also raise concerns about security; some argue that PoS may be more vulnerable if not properly implemented because it relies heavily on coin ownership rather than computational effort.

Leased Proof of Stake (LPoS)

Leased Proof of Stake is a variant designed for greater flexibility and decentralization. In LPoS, as used by Waves, users temporarily lease their coins to validators without transferring ownership, which lets smaller holders take part in validation. Tron uses a related but distinct model, Delegated Proof of Stake (DPoS).
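What leasing changes is who gets counted, not who owns the coins. In the sketch below, each lease adds to a validator's effective stake for selection purposes while the coins stay in the lessor's balance. The data layout and amounts are hypothetical and simplified; real implementations add rules for cancelling leases and distributing rewards.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lease:
    lessor: str     # account that keeps ownership of the coins
    validator: str  # node whose selection weight the coins count toward
    amount: int


def effective_stakes(balances: dict[str, int], validators: set[str],
                     leases: list[Lease]) -> dict[str, int]:
    """Selection weight = own coins not leased away + coins leased in."""
    leased_out: dict[str, int] = {}
    leased_in: dict[str, int] = {}
    for lease in leases:
        leased_out[lease.lessor] = leased_out.get(lease.lessor, 0) + lease.amount
        leased_in[lease.validator] = leased_in.get(lease.validator, 0) + lease.amount
    # A lessor cannot lease more than it holds; balances themselves never change.
    for account, amount in leased_out.items():
        if amount > balances.get(account, 0):
            raise ValueError(f"{account} leased out more than it holds")
    return {
        v: balances.get(v, 0) - leased_out.get(v, 0) + leased_in.get(v, 0)
        for v in validators
    }


balances = {"node1": 5_000, "small_a": 40, "small_b": 60}  # hypothetical DOGE amounts
leases = [Lease("small_a", "node1", 40), Lease("small_b", "node1", 60)]
print(effective_stakes(balances, {"node1"}, leases))  # {'node1': 5100}
print(balances["small_a"])  # 40
```

The small holders never hand over their coins; they only lend their weight to a validator they choose.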

For DOGE, LPoS could offer an attractive middle ground, enabling broader validator participation without requiring large upfront stakes or the technical expertise associated with traditional staking setups.

The idea remains at the discussion stage and has no formal implementation plan, but if adopted carefully it could balance security with inclusivity.

Hybrid Consensus Models

Another avenue being explored involves hybrid systems combining elements from both PoW and PoS mechanisms—or even other algorithms—to leverage their respective strengths while mitigating weaknesses like high energy use or centralization risks.

A hybrid approach might let DOGE retain mining-based validation while adding staking components that improve efficiency or security. For example, if a block needed both valid proof of work and approval from stakers, a 51% attack would require control of both hash power and stake. Such attacks are a common concern among critics wary of the single point of failure in systems that rely on one mechanism alone.

Implementing such models would demand rigorous testing phases before deployment but could ultimately provide a balanced solution aligned with evolving industry standards.
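One way to picture a hybrid rule is as two independent checks that every block must pass: a proof-of-work check and a stakeholder vote. The sketch below is a hypothetical rule of that shape, similar in spirit to existing hybrid designs such as Decred's but with made-up parameters (an 8-bit toy difficulty and a quorum of 3 approvals). It shows why an attacker would need both hash power and stake to force blocks through.

```python
import hashlib


def meets_pow(header: bytes, nonce: int, difficulty_bits: int) -> bool:
    # Same idea as ordinary PoW: the hash must fall below a target.
    digest = hashlib.sha256(header + nonce.to_bytes(8, "little")).digest()
    return int.from_bytes(digest, "big") < (1 << (256 - difficulty_bits))


def accept_block(header: bytes, nonce: int, votes: dict[str, bool],
                 difficulty_bits: int = 8, quorum: int = 3) -> bool:
    """Hypothetical hybrid rule: valid PoW AND at least `quorum` staker approvals."""
    approvals = sum(1 for approved in votes.values() if approved)
    return meets_pow(header, nonce, difficulty_bits) and approvals >= quorum


header = b"toy hybrid block"
nonce = next(n for n in range(1_000_000) if meets_pow(header, n, 8))
votes = {"s1": True, "s2": True, "s3": True, "s4": False, "s5": False}
print(accept_block(header, nonce, votes))  # True: valid PoW and 3 of 5 approvals
votes["s3"] = False
print(accept_block(header, nonce, votes))  # False: hash power alone is not enough
```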

Recent Developments & Community Engagement

The ongoing debate about upgrading Dogecoin's consensus protocol reflects active engagement across multiple channels—including online forums like Reddit and Twitter—as well as developer meetings dedicated specifically to this topic. Community members are sharing ideas openly; some propose incremental changes while others advocate comprehensive overhauls aligned with broader industry trends toward sustainable blockchain solutions.

Developers have contributed by assessing the feasibility of these proposals, testing prototypes where possible, and gathering feedback from users worldwide who remain invested in DOGE's future stability.

Challenges & Risks Associated With Upgrades

Any significant change carries inherent risks:

- Community Split: Major protocol modifications might divide supporters into factions favoring current versus proposed systems.

- Security Concerns: Transition periods can introduce vulnerabilities if not managed meticulously.

- Regulatory Considerations: Depending on how upgrades are implemented, for example how staking rules are designed, they may attract regulatory scrutiny, especially under securities laws or anti-money laundering rules.

Furthermore, ensuring backward compatibility during upgrades is crucial so existing users experience minimal disruption.

The Path Forward for Dogecoin

Upgrading Dogecoin’s consensus mechanism presents both opportunities and challenges rooted deeply in technical feasibility alongside community sentiment. While proposals like moving toward proof-of-stake variants or hybrid models aim at making DOGE more sustainable amid environmental concerns—and possibly improving scalability—they require careful planning backed by thorough testing phases before any live deployment occurs.

As developments continue unfolding through active discussions among developers and stakeholders worldwide—with transparency being key—the future trajectory will depend heavily on balancing innovation with security assurances that uphold user trust.

Staying informed about these ongoing debates helps investors, developers, and enthusiasts understand how one of crypto's most beloved meme coins aims not just to remain relevant but also to adapt responsibly amid rapid technological change in blockchain ecosystems.

JCUSER-IC8sJL1q

2025-05-11 08:47

What proposals exist to upgrade the Dogecoin (DOGE) consensus mechanism?



JioCoins

2025-08-29 18:26

Top 100 24h Gainers 🚀 M $0.5222


Upcoming Events for Degenerate Ape Owners: What to Expect in 2024

Overview of Degenerate Ape and Its Community Engagement

Degenerate Ape is a notable subset within the broader Bored Ape Yacht Club (BAYC) ecosystem, which has become one of the most influential NFT collections since its launch in April 2021. Known for their distinctive, often humorous designs, Degenerate Apes have cultivated a dedicated community of collectors and enthusiasts. These digital assets are more than just images; they represent membership in an active social network that regularly organizes events, collaborations, and investment discussions.

The community's engagement is evident through frequent online meetups on platforms like Discord and Twitter Spaces. These virtual gatherings serve as forums for sharing insights about market trends, upcoming projects, or simply celebrating new drops. Additionally, there have been several in-person meetups where owners can showcase their NFTs and connect face-to-face with fellow collectors—further strengthening community bonds.

Key Upcoming Events for Degenerate Ape Owners

While specific event dates are subject to change based on ongoing developments within the NFT space, several recurring themes highlight what Degenerate Ape owners can anticipate in 2024:

Community Meetups & Social Gatherings:

The community continues to prioritize real-world interactions through organized meetups across major cities worldwide. These events provide opportunities for networking, showcasing rare NFTs, and participating in live discussions about future trends.

Collaborative Drops & Exclusive Content:

Yuga Labs frequently partners with brands such as Adidas or Sotheby’s to create exclusive content or limited-edition drops tailored specifically for BAYC members, including those holding Degenerate Apes. Upcoming collaborations could include special merchandise releases or virtual experiences.

NFT Art Exhibitions & Blockchain Conferences:

As part of broader industry events focused on blockchain technology and digital art innovation, such as NFT NYC or ETHGlobal, Degenerate Ape owners may find exclusive access or VIP sessions designed around their collection.

Investment Seminars & Market Trend Discussions:

Given the volatile nature of NFTs and cryptocurrencies today, many community-led webinars focus on investment strategies amid fluctuating markets. These sessions aim to educate members about maximizing value while navigating regulatory uncertainties.

Strategic Partnerships Impacting Future Events

Yuga Labs’ ongoing collaborations significantly influence upcoming activities relevant to Degenerate Ape owners. Recent partnerships with high-profile brands like Adidas have led to unique digital collectibles and physical merchandise tied directly into the BAYC universe. Such alliances often translate into special events, such as virtual launches or offline exhibitions, that enhance member engagement while expanding brand visibility.

Furthermore, Yuga Labs’ involvement with major auction houses like Sotheby’s has opened avenues for high-profile sales featuring rare NFTs from the collection—including some from the Degenerate Apes series. These auctions not only boost market interest but also create opportunities for owners seeking liquidity or recognition within elite circles.

Investment Opportunities Through New Projects

The NFT landscape remains dynamic, with continuous project launches related to BAYC properties. In recent years, and particularly throughout 2023, Yuga Labs announced multiple initiatives aimed at expanding its ecosystem:

- Launching new collections that complement existing ones

- Developing metaverse integrations where avatars can interact

- Creating utility-driven tokens linked directly to ownership rights

For Degenerate Ape holders interested in long-term value appreciation—or diversifying their portfolios—these developments present potential investment avenues worth monitoring closely during upcoming industry events.

Risks Facing Community Members: Market Volatility & Regulatory Changes

Despite optimism surrounding future activities, it’s essential for members to remain aware of inherent risks:

Market Fluctuations: The NFT market is known for rapid price swings driven by macroeconomic factors or shifts in investor sentiment.

Regulatory Environment: Governments worldwide are increasingly scrutinizing cryptocurrencies and digital assets; potential policy changes could impact trading capabilities or ownership rights.

Being informed about these risks allows collectors not only to participate actively but also to manage their investments responsibly amid evolving legal landscapes.

How To Stay Updated on Future Events

To maximize participation in upcoming activities:

- Follow official channels, such as Yuga Labs’ accounts on X (formerly Twitter) and the Discord servers dedicated to BAYC communities.

- Subscribe to newsletters from prominent NFT news outlets covering event announcements.

- Engage actively during online discussions—these often reveal early insights into scheduled meetups or collaborative projects.

- Attend major blockchain conferences where BAYC-related panels might feature updates regarding future initiatives involving Degenerate Apes.

By staying connected through these channels, owners can ensure they don’t miss out on valuable opportunities aligned with their interests within this vibrant ecosystem.

Final Thoughts: What To Expect Moving Forward

As 2024 progresses, Degenerate Ape owners can look forward to continued growth through diverse events, from social gatherings and art exhibitions to strategic partnerships with global brands. Market volatility still calls for cautious participation, and regulatory landscapes continue to evolve, but the overall outlook remains optimistic, thanks largely to the active community engagement fostered by Yuga Labs' approach to expanding its ecosystem globally.

For current holders who want not only to enjoy their assets but also to use them strategically, active participation in forthcoming events will be crucial for staying ahead in a landscape shaped by technological advances and the cultural shifts driving tomorrow's digital economy.

Keywords: Degenerate Ape upcoming events | Bored Ape Yacht Club activities | NFT community meetups | Yuga Labs partnerships | crypto art exhibitions | blockchain conferences

JCUSER-F1IIaxXA

2025-05-29 03:16

Are there any upcoming events for Degenerate Ape owners?

Upcoming Events for Degenerate Ape Owners: What to Expect in 2024

Overview of Degenerate Ape and Its Community Engagement

Degenerate Ape is a notable subset within the broader Bored Ape Yacht Club (BAYC) ecosystem, which has become one of the most influential NFT collections since its launch in April 2021. Known for their distinctive, often humorous designs, Degenerate Apes have cultivated a dedicated community of collectors and enthusiasts. These digital assets are more than just images; they represent membership in an active social network that regularly organizes events, collaborations, and investment discussions.

The community's engagement is evident through frequent online meetups on platforms like Discord and Twitter Spaces. These virtual gatherings serve as forums for sharing insights about market trends, upcoming projects, or simply celebrating new drops. Additionally, there have been several in-person meetups where owners can showcase their NFTs and connect face-to-face with fellow collectors—further strengthening community bonds.

Key Upcoming Events for Degenerate Ape Owners

While specific event dates are subject to change based on ongoing developments within the NFT space, several recurring themes highlight what Degenerate Ape owners can anticipate in 2024:

Community Meetups & Social Gatherings:

The community continues to prioritize real-world interactions through organized meetups across major cities worldwide. These events provide opportunities for networking, showcasing rare NFTs, and participating in live discussions about future trends.Collaborative Drops & Exclusive Content:

Yuga Labs frequently partners with brands such as Adidas or Sotheby’s to create exclusive content or limited-edition drops tailored specifically for BAYC members—including those holding Degenerate Apes. Expect upcoming collaborations that could include special merchandise releases or virtual experiences.NFT Art Exhibitions & Blockchain Conferences:

As part of broader industry events focused on blockchain technology and digital art innovation—such as NFT NYC or ETHGlobal—Degenerate Ape owners may find exclusive access or VIP sessions designed around their collection.Investment Seminars & Market Trend Discussions:

Given the volatile nature of NFTs and cryptocurrencies today, many community-led webinars focus on investment strategies amid fluctuating markets. These sessions aim to educate members about maximizing value while navigating regulatory uncertainties.

Strategic Partnerships Impacting Future Events

Yuga Labs’ ongoing collaborations significantly influence upcoming activities relevant to Degenerate Ape owners. Recent partnerships with high-profile brands like Adidas have produced digital collectibles and physical merchandise tied directly to the BAYC universe. Such alliances often lead to special events, such as virtual launches or offline exhibitions, that boost member engagement while expanding brand visibility.

Furthermore, Yuga Labs’ involvement with major auction houses like Sotheby’s has opened avenues for high-profile sales featuring rare NFTs from the collection—including some from the Degenerate Apes series. These auctions not only boost market interest but also create opportunities for owners seeking liquidity or recognition within elite circles.

Investment Opportunities Through New Projects

The NFT landscape remains dynamic, with continuous project launches related to BAYC properties. In recent years, and particularly throughout 2023, Yuga Labs announced multiple initiatives aimed at expanding its ecosystem:

- Launching new collections that complement existing ones

- Developing metaverse integrations where avatars can interact

- Creating utility-driven tokens linked directly to ownership rights

For Degenerate Ape holders interested in long-term value appreciation—or diversifying their portfolios—these developments present potential investment avenues worth monitoring closely during upcoming industry events.

Risks Facing Community Members: Market Volatility & Regulatory Changes

Despite optimism surrounding future activities, it’s essential for members to remain aware of inherent risks:

Market Fluctuations: The NFT market is known for rapid price swings driven by macroeconomic factors or shifts in investor sentiment.

Regulatory Environment: Governments worldwide are increasingly scrutinizing cryptocurrencies and digital assets; potential policy changes could impact trading capabilities or ownership rights.

Being informed about these risks allows collectors not only to participate actively but also to manage their investments responsibly amid evolving legal landscapes.

How To Stay Updated on Future Events

To maximize participation in upcoming activities:

- Follow official channels such as Yuga Labs’ social media accounts (Twitter/X) and Discord servers dedicated to BAYC communities.

- Subscribe to newsletters from prominent NFT news outlets covering event announcements.

- Engage actively in online discussions, which often reveal early details about scheduled meetups or collaborative projects.

- Attend major blockchain conferences where BAYC-related panels might feature updates regarding future initiatives involving Degenerate Apes.

By staying connected through these channels, owners can ensure they don’t miss out on valuable opportunities aligned with their interests within this vibrant ecosystem.

Final Thoughts: What To Expect Moving Forward

Heading further into 2024, the Degenerate Ape community appears set for continued growth through diverse events, from social gatherings and art exhibitions to strategic partnerships with global brands. Market volatility still calls for cautious participation, and regulatory landscapes continue to evolve. Even so, the overall outlook remains optimistic, thanks largely to the active community engagement fostered by Yuga Labs’ approach to expanding its ecosystem globally.

Some current holders want not only to enjoy their assets but also to use them strategically. For them, participating actively in forthcoming events will be crucial to staying ahead in a landscape shaped by technological advances and the cultural shifts driving tomorrow's digital economy.



What Is Each Platform’s Approach to Mobile-Web Parity?

Understanding how different digital platforms support and promote mobile-web parity is essential for businesses aiming to deliver consistent user experiences across devices. Each platform—Google, Apple, Microsoft, Mozilla—has its own set of tools, guidelines, and initiatives designed to facilitate this goal. Recognizing these differences helps developers and organizations optimize their websites effectively for all users.

Google’s Role in Promoting Mobile-Web Parity

Google has been a pioneer in advocating for mobile-web parity through various initiatives that influence search rankings and web development standards. Its push toward mobile-first indexing means that Google primarily uses the mobile version of a website for indexing and ranking purposes. This shift emphasizes the importance of having a fully functional, responsive site on mobile devices.
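Mobile-first indexing relies on the mobile version of a page. As a result, content that exists only on the desktop version may not be indexed at all. The minimal TypeScript sketch below compares two snapshots of the same page and lists desktop headings, links, and structured-data types that are missing from the mobile version.

The `PageSnapshot` shape and the `findParityGaps` and `hasParity` helpers are hypothetical. In practice, the snapshots would come from crawling both versions of the page with whatever tool you already use.

```typescript
// Hypothetical shape of what a crawler extracted from one version of a page.
interface PageSnapshot {
  headings: string[];
  links: string[];
  structuredDataTypes: string[]; // schema.org types, e.g. "Product"
}

interface ParityGaps {
  missingHeadings: string[];
  missingLinks: string[];
  missingStructuredData: string[];
}

// Items present on desktop but absent on mobile (exact match after trimming whitespace).
function missingFrom(desktopItems: string[], mobileItems: string[]): string[] {
  const onMobile = new Set(mobileItems.map((item) => item.trim()));
  return desktopItems.filter((item) => !onMobile.has(item.trim()));
}

// Under mobile-first indexing, anything reported here is at risk of not being indexed.
function findParityGaps(desktop: PageSnapshot, mobile: PageSnapshot): ParityGaps {
  return {
    missingHeadings: missingFrom(desktop.headings, mobile.headings),
    missingLinks: missingFrom(desktop.links, mobile.links),
    missingStructuredData: missingFrom(desktop.structuredDataTypes, mobile.structuredDataTypes),
  };
}

function hasParity(gaps: ParityGaps): boolean {
  return (
    gaps.missingHeadings.length === 0 &&
    gaps.missingLinks.length === 0 &&
    gaps.missingStructuredData.length === 0
  );
}

// Made-up snapshots: the mobile version dropped the FAQ section.
const desktopSnapshot: PageSnapshot = {
  headings: ["Pricing", "Reviews", "FAQ"],
  links: ["/pricing", "/reviews", "/faq"],
  structuredDataTypes: ["Product", "FAQPage"],
};
const mobileSnapshot: PageSnapshot = {
  headings: ["Pricing", "Reviews"],
  links: ["/pricing", "/reviews"],
  structuredDataTypes: ["Product"],
};
const parityReport = findParityGaps(desktopSnapshot, mobileSnapshot);
```

Comparing extracted items rather than raw HTML keeps the check tolerant of layout differences. A mobile page can legitimately look different from the desktop page as long as it carries the same content.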

One of Google’s significant contributions is the development of Accelerated Mobile Pages (AMP), which aim to deliver fast-loading content optimized specifically for mobile users. Additionally, Google supports Progressive Web Apps (PWAs), enabling websites to function like native apps with offline capabilities, push notifications, and smooth performance on smartphones. These tools help ensure that websites are not only accessible but also engaging across platforms.
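To make the PWA side concrete, the following minimal sketch checks a web app manifest against installability rules loosely modeled on those Chromium has documented:

- a `name` or `short_name`
- a `start_url`
- an app-like `display` mode
- 192x192 and 512x512 icons
- delivery over HTTPS

Other browsers apply different rules, and the list changes over time, so treat this rule set as an assumption. `ManifestDraft`, `manifestProblems`, and the sample manifest are hypothetical. The member names themselves follow the Web App Manifest format.

```typescript
// Subset of Web App Manifest members used by the check below.
interface ManifestIcon {
  src: string;
  sizes?: string; // space-separated, e.g. "192x192 512x512" or "any"
  type?: string;
}

interface ManifestDraft {
  name?: string;
  short_name?: string;
  start_url?: string;
  display?: string;
  icons?: ManifestIcon[];
  prefer_related_applications?: boolean;
}

const INSTALLABLE_DISPLAY_MODES = ["fullscreen", "standalone", "minimal-ui", "window-controls-overlay"];

// HTTPS, or plain HTTP on localhost during development.
function isServedSecurely(pageUrl: string): boolean {
  return pageUrl.startsWith("https://") || /^http:\/\/(localhost|127\.0\.0\.1)(:\d+)?(\/|$)/.test(pageUrl);
}

// True if any icon lists the given square size among its sizes (e.g. 192 -> "192x192").
function hasIconOfSize(icons: ManifestIcon[], px: number): boolean {
  const wanted = `${px}x${px}`;
  return icons.some((icon) =>
    (icon.sizes ?? "").split(/\s+/).some((size) => size.toLowerCase() === wanted)
  );
}

// Returns human-readable problems; an empty array means these (assumed) checks pass.
function manifestProblems(manifest: ManifestDraft, pageUrl: string): string[] {
  const problems: string[] = [];
  if (!isServedSecurely(pageUrl)) problems.push("page must be served over HTTPS (or localhost)");
  if (!manifest.name && !manifest.short_name) problems.push("missing name or short_name");
  if (!manifest.start_url) problems.push("missing start_url");
  if (!manifest.display || INSTALLABLE_DISPLAY_MODES.indexOf(manifest.display) === -1) {
    problems.push("display should be fullscreen, standalone, minimal-ui or window-controls-overlay");
  }
  const icons = manifest.icons ?? [];
  if (!hasIconOfSize(icons, 192)) problems.push("missing a 192x192 icon");
  if (!hasIconOfSize(icons, 512)) problems.push("missing a 512x512 icon");
  if (manifest.prefer_related_applications === true) {
    problems.push("prefer_related_applications points users to a native app instead");
  }
  return problems;
}

const shopManifest: ManifestDraft = {
  name: "Example Shop",
  short_name: "Shop",
  start_url: "/",
  display: "standalone",
  icons: [
    { src: "/icons/icon-192.png", sizes: "192x192", type: "image/png" },
    { src: "/icons/icon-512.png", sizes: "512x512", type: "image/png" },
  ],
};
```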

Apple’s Focus on Native Design Guidelines

Apple emphasizes seamless integration between hardware and software through its iOS ecosystem. Its Safari browser supports PWAs but with certain limitations compared to other browsers; nonetheless, Apple encourages developers to adhere to its Human Interface Guidelines (HIG). These guidelines focus heavily on creating intuitive interfaces tailored for iPhone and iPad screens while ensuring accessibility features are integrated.
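Touch-friendly sizing is one HIG recommendation that is easy to check automatically. The sketch below flags interactive elements smaller than the 44x44-point minimum hit area the HIG recommends.

It assumes the page uses a `width=device-width` viewport, so that one CSS pixel corresponds roughly to one point on iOS. The `TapTarget` type, the sample measurements, and `undersizedTargets` are illustrative.

```typescript
// A measured interactive element, e.g. collected from getBoundingClientRect() during a test run.
interface TapTarget {
  label: string;
  widthPx: number;
  heightPx: number;
}

// Apple's HIG recommends a minimum hit area of 44x44 points. This sketch assumes a
// width=device-width viewport, where one CSS pixel is treated as one point.
const MIN_TAP_SIZE = 44;

// Returns the targets whose width or height falls below the minimum.
function undersizedTargets(targets: TapTarget[], minSize: number = MIN_TAP_SIZE): TapTarget[] {
  return targets.filter((target) => target.widthPx < minSize || target.heightPx < minSize);
}

// Made-up measurements for three controls.
const measuredTargets: TapTarget[] = [
  { label: "Add to cart", widthPx: 160, heightPx: 48 },
  { label: "Close dialog", widthPx: 24, heightPx: 24 },
  { label: "Menu", widthPx: 44, heightPx: 44 },
];
const tooSmall = undersizedTargets(measuredTargets).map((target) => target.label);
```

Here only "Close dialog" falls below the default threshold. Passing a larger `minSize` makes the check stricter.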

Recent updates from Apple reinforce the importance of optimizing web experiences within its ecosystem through detailed design recommendations that prioritize touch interactions, fast load times, and visual consistency across devices. Apple does not shape the web as directly as Google does through its search ranking algorithms. Even so, it influences best practices through developer resources aimed at better web performance on iOS devices.

Microsoft’s Support Through Developer Tools

Microsoft's approach centers on supporting Universal Windows Platform (UWP) applications alongside traditional websites optimized for the Edge browser. Microsoft Edge is now built on Chromium, the same open-source base as Chrome, so it shares Chrome's robust PWA support. The company uses this shared foundation to promote cross-platform consistency.

Microsoft offers developer tools, including Visual Studio Code and Azure cloud services, that help test responsiveness across multiple device types. Its emphasis is on ensuring that enterprise applications can be accessed seamlessly from desktop or mobile without sacrificing functionality or security.
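Whatever tooling is used, responsiveness testing usually starts with a list of viewports to exercise. As an illustration (not a Microsoft API), the sketch below expands a few device classes into portrait and landscape cases. Those cases could then be fed to an emulator or a browser-automation script.

The profile sizes are illustrative rather than a recommended device list. `DeviceProfile` and `buildViewportMatrix` are hypothetical names.

```typescript
// Illustrative device classes with CSS viewport sizes; replace with the devices your analytics show.
interface DeviceProfile {
  device: string;
  width: number;
  height: number;
}

type ViewOrientation = "portrait" | "landscape";

interface ViewportCase {
  device: string;
  width: number;
  height: number;
  orientation: ViewOrientation;
}

const DEVICE_PROFILES: DeviceProfile[] = [
  { device: "small phone", width: 375, height: 667 },
  { device: "tablet", width: 768, height: 1024 },
  { device: "laptop", width: 1366, height: 768 },
];

// Expands every profile into a portrait case and a landscape case by swapping its sides.
function buildViewportMatrix(profiles: DeviceProfile[]): ViewportCase[] {
  const cases: ViewportCase[] = [];
  for (const profile of profiles) {
    const shortSide = Math.min(profile.width, profile.height);
    const longSide = Math.max(profile.width, profile.height);
    cases.push({ device: profile.device, width: shortSide, height: longSide, orientation: "portrait" });
    cases.push({ device: profile.device, width: longSide, height: shortSide, orientation: "landscape" });
  }
  return cases;
}

const viewportMatrix = buildViewportMatrix(DEVICE_PROFILES);
```

Keeping the matrix as data makes it easy to extend when analytics show a new common screen size.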

Mozilla's Contributions Toward Consistent Web Experiences

Mozilla Firefox champions open standards compliance by encouraging adherence to HTML5/CSS3 specifications vital for responsive design implementation. The organization actively participates in developing web APIs that enhance cross-browser compatibility—a key factor in maintaining uniform experiences regardless of platform choice.

Firefox also supports installing PWAs directly from the browser interface on Android. Mozilla pairs this with privacy controls and performance enhancements tailored to diverse device environments, including smartphones.
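PWA support still differs between Safari, Firefox, Edge, and Chrome. A common pattern is therefore to detect the capability rather than the browser, and to treat the PWA layer as an optional enhancement. The sketch below registers a service worker only when `navigator.serviceWorker` exists.

To keep the sketch runnable outside a browser, it accepts a structurally typed stand-in for `navigator`. `NavigatorLike` and `registerOfflineSupport` are hypothetical names, and the `/sw.js` script path is an assumption. `register(scriptURL, { scope })` itself is the standard Service Worker API call.

```typescript
// Minimal structural types so the sketch does not depend on DOM typings;
// in a browser the real `navigator` satisfies NavigatorLike.
interface SwContainerLike {
  register(scriptUrl: string, options?: { scope?: string }): Promise<unknown>;
}

interface NavigatorLike {
  serviceWorker?: SwContainerLike;
}

type RegistrationOutcome = "registered" | "unsupported" | "failed";

// Progressive enhancement: the site must keep working when this returns "unsupported".
async function registerOfflineSupport(
  nav: NavigatorLike,
  scriptUrl: string = "/sw.js" // hypothetical path to the service worker script
): Promise<RegistrationOutcome> {
  if (!nav.serviceWorker) {
    return "unsupported"; // capability missing, e.g. an older browser or a restricted context
  }
  try {
    await nav.serviceWorker.register(scriptUrl, { scope: "/" });
    return "registered";
  } catch {
    return "failed"; // e.g. the script was not found or the origin is not secure
  }
}
```

In a browser, you would call `registerOfflineSupport(navigator)` after the page loads. Any outcome other than "registered" should leave the site working as an ordinary website.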

Industry Trends Shaping Platform Strategies

Over recent years—especially during 2020–2022—the industry has seen an accelerated push toward achieving true mobile-web parity driven largely by external factors such as the COVID-19 pandemic's impact on digital engagement levels[5]. E-commerce giants like Amazon have invested heavily into optimizing their sites' responsiveness because they recognize poor mobile experiences lead directly to lost sales[6].

Furthermore, major players continuously update their guidelines: Google's enhanced support for PWAs reinforces this trend[3], while Apple's updated design principles emphasize faster load times and better touch interactions[4]. These collective efforts underscore a shared industry understanding: delivering consistent user experience is critical not just from a usability perspective but also from a business growth standpoint.

Implications For Businesses And Developers

For organizations seeking a competitive advantage online, especially those operating multi-platform digital assets, the first step is to understand each platform's approach to mobile-web parity:

- Leverage Platform-Specific Features: Use Google's AMP technology where appropriate; adopt Apple's HIG recommendations; utilize Microsoft's developer tools.

- Prioritize Responsive Design: Ensure your website adapts seamlessly across screen sizes using flexible layouts.

- Implement Progressive Web Apps: Take advantage of widely supported PWA capabilities such as offline access and push notifications.

- Test Across Devices Regularly: Use emulators or real-device testing environments aligned with each platform's specifications.
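Responsive design is usually applied mobile-first. The base layout targets small screens, and `min-width` media queries progressively upgrade it. The sketch below mirrors that logic in TypeScript so that scripts and stylesheets can share one breakpoint table. The breakpoint names and pixel values are hypothetical.

```typescript
// Hypothetical breakpoints in CSS pixels, written mobile-first: the base "mobile"
// layout applies at every width, and each entry upgrades it from its minWidth upward,
// mirroring `@media (min-width: ...)` rules.
interface Breakpoint {
  name: string;
  minWidth: number;
}

const BREAKPOINTS: Breakpoint[] = [
  { name: "tablet", minWidth: 600 },
  { name: "desktop", minWidth: 1024 },
];

// Picks the widest breakpoint whose minWidth is satisfied, falling back to "mobile".
function layoutFor(viewportWidth: number, breakpoints: Breakpoint[] = BREAKPOINTS): string {
  let layout = "mobile";
  let bestMinWidth = -1;
  for (const bp of breakpoints) {
    if (viewportWidth >= bp.minWidth && bp.minWidth > bestMinWidth) {
      layout = bp.name;
      bestMinWidth = bp.minWidth;
    }
  }
  return layout;
}

// The equivalent CSS media query, so stylesheets and scripts stay in sync.
function mediaQueryFor(bp: Breakpoint): string {
  return `(min-width: ${bp.minWidth}px)`;
}
```

In a browser, `window.matchMedia(mediaQueryFor(bp)).matches` reports whether a given breakpoint currently applies.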

By aligning development strategies accordingly—and staying updated with evolving standards—you can provide users with an optimal experience regardless of device type or operating system environment.


This understanding shows why each platform’s approach matters when striving for true mobile-web parity, a critical factor influencing user satisfaction, engagement metrics, and ultimately business success.

JCUSER-WVMdslBw

2025-05-26 19:31

What is each platform’s mobile-web parity?

What Is Each Platform’s Approach to Mobile-Web Parity?

Understanding how different digital platforms support and promote mobile-web parity is essential for businesses aiming to deliver consistent user experiences across devices. Each platform—Google, Apple, Microsoft, Mozilla—has its own set of tools, guidelines, and initiatives designed to facilitate this goal. Recognizing these differences helps developers and organizations optimize their websites effectively for all users.

Google’s Role in Promoting Mobile-Web Parity

Google has been a pioneer in advocating for mobile-web parity through various initiatives that influence search rankings and web development standards. Its push toward mobile-first indexing means that Google primarily uses the mobile version of a website for indexing and ranking purposes. This shift emphasizes the importance of having a fully functional, responsive site on mobile devices.

One of Google’s significant contributions is the development of Accelerated Mobile Pages (AMP), which aim to deliver fast-loading content optimized specifically for mobile users. Additionally, Google supports Progressive Web Apps (PWAs), enabling websites to function like native apps with offline capabilities, push notifications, and smooth performance on smartphones. These tools help ensure that websites are not only accessible but also engaging across platforms.

Apple’s Focus on Native Design Guidelines

Apple emphasizes seamless integration between hardware and software through its iOS ecosystem. Its Safari browser supports PWAs but with certain limitations compared to other browsers; nonetheless, Apple encourages developers to adhere to its Human Interface Guidelines (HIG). These guidelines focus heavily on creating intuitive interfaces tailored for iPhone and iPad screens while ensuring accessibility features are integrated.

Recent updates from Apple reinforce the importance of optimizing web experiences within its ecosystem through detailed design recommendations that prioritize touch interactions, fast load times, and visual consistency across devices. Apple does not shape the web as directly as Google does through its search ranking algorithms. Even so, it influences best practices through developer resources aimed at better web performance on iOS devices.

Microsoft’s Support Through Developer Tools

Microsoft's approach centers on supporting Universal Windows Platform (UWP) applications alongside traditional websites optimized for the Edge browser. Microsoft Edge is now built on Chromium, the same open-source base as Chrome, so it shares Chrome's robust PWA support. The company uses this shared foundation to promote cross-platform consistency.

Microsoft offers developer tools, including Visual Studio Code and Azure cloud services, that help test responsiveness across multiple device types. Its emphasis is on ensuring that enterprise applications can be accessed seamlessly from desktop or mobile without sacrificing functionality or security.

Mozilla's Contributions Toward Consistent Web Experiences